Application Lifecycle Management

01. Identify the number of containers created in the red pod.

Answer: 3
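The READY column of `kubectl get pod` reads ready/total. The number after the slash is therefore the container count, even while the pod is still in ContainerCreating.

Below is a minimal sketch that pulls that number out of the output. It assumes the default column layout (NAME first, READY second), and `containers_in_pod` is a made-up helper name. On a live cluster, `kubectl get pod red -o jsonpath='{.spec.containers[*].name}'` lists the container names straight from the pod spec instead.

```shell
#!/usr/bin/env bash
# Print the total container count of one pod from `kubectl get pod` output on stdin.
# Assumes the default layout: NAME in column 1, READY ("ready/total") in column 2.
containers_in_pod() {
  local pod="$1"
  awk -v p="$pod" '$1 == p { split($2, r, "/"); print r[2] }'
}

# Rows copied from the output in this question (trimmed to the first five columns).
# On a live cluster, pipe `kubectl get pod` into the function instead.
sample='NAME        READY   STATUS              RESTARTS   AGE
app         1/1     Running             0          2m31s
fluent-ui   1/1     Running             0          2m31s
red         0/3     ContainerCreating   0          2m23s'

printf '%s\n' "$sample" | containers_in_pod red
```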

```
root@controlplane:~# kubectl get pod -o wide
NAME        READY   STATUS              RESTARTS   AGE     IP           NODE           NOMINATED NODE   READINESS GATES
app         1/1     Running             0          2m31s   10.244.0.6   controlplane   <none>           <none>
fluent-ui   1/1     Running             0          2m31s   10.244.0.5   controlplane   <none>           <none>
red         0/3     ContainerCreating   0          2m23s   <none>       controlplane   <none>           <none>
```

02. Identify the names of the containers running in the blue pod.

Answer: teal, navy

```
root@controlplane:~# kubectl describe pod blue
Name:         blue
Namespace:    default
Priority:     0
Node:         controlplane/10.1.241.3
Start Time:   Fri, 21 Jan 2022 02:24:06 +0000
Labels:       <none>
Annotations:  <none>
Status:       Running
IP:           10.244.0.10
IPs:
  IP:  10.244.0.10
Containers:
  teal:
    Container ID:  docker://62480f48b01c82bfdc685cc82ce84b4d49df150be1edb802bf3b6f67829da87f
    Image:         busybox
    Image ID:      docker-pullable://busybox@sha256:5acba83a746c7608ed544dc1533b87c737a0b0fb730301639a0179f9344b1678
    Port:          <none>
    Host Port:     <none>
    Command:
      sleep
      4500
    State:          Running
      Started:      Fri, 21 Jan 2022 02:24:26 +0000
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-zkxkk (ro)
  navy:
    Container ID:  docker://dd2bd7c3569127e18e29d5206e2b81904971277a028677aec9d6c88e63f61c50
    Image:         busybox
    Image ID:      docker-pullable://busybox@sha256:5acba83a746c7608ed544dc1533b87c737a0b0fb730301639a0179f9344b1678
    Port:          <none>
    Host Port:     <none>
    Command:
      sleep
      4500
    State:          Running
      Started:      Fri, 21 Jan 2022 02:24:30 +0000
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-zkxkk (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-zkxkk:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-zkxkk
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type    Reason     Age   From               Message
  ----    ------     ----  ----               -------
  Normal  Scheduled  49s   default-scheduler  Successfully assigned default/blue to controlplane
  Normal  Pulling    43s   kubelet            Pulling image "busybox"
  Normal  Pulled     34s   kubelet            Successfully pulled image "busybox" in 8.759609472s
  Normal  Created    31s   kubelet            Created container teal
  Normal  Started    29s   kubelet            Started container teal
  Normal  Pulling    29s   kubelet            Pulling image "busybox"
  Normal  Pulled     29s   kubelet            Successfully pulled image "busybox" in 295.298114ms
  Normal  Created    27s   kubelet            Created container navy
  Normal  Started    25s   kubelet            Started container navy
```
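The container names also show up in the Events section, because kubelet records a "Created container <name>" event when it creates each container. Below is a minimal sketch that extracts them from describe output read on stdin; `container_names_from_events` is a made-up helper name.

Events are kept only for a limited time (one hour by default on the API server). For an older pod, `kubectl get pod blue -o jsonpath='{.spec.containers[*].name}'` is the more reliable way to get the names.

```shell
#!/usr/bin/env bash
# Print container names from the "Created container <name>" lines in the
# Events section of `kubectl describe pod` output read on stdin.
container_names_from_events() {
  sed -n 's/.*Created container \([^ ]*\).*/\1/p'
}

# Event lines copied from the describe output above.
events='Normal  Created    31s  kubelet  Created container teal
Normal  Started    29s  kubelet  Started container teal
Normal  Created    27s  kubelet  Created container navy
Normal  Started    25s  kubelet  Started container navy'

printf '%s\n' "$events" | container_names_from_events
```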

03. Create a multi-container pod with 2 containers.

Use the spec given below.

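If the pod goes into CrashLoopBackOff, add the command sleep 1000 to the lemon container. The busybox image's default command is sh, which exits immediately when no terminal is attached, so without a long-running command the container keeps restarting.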

- Name: yellow

- Container 1 Name: lemon

- Container 1 Image: busybox

- Container 2 Name: gold

- Container 2 Image: redis

# Hint

Use kubectl run to generate a pod definition file, then add the second container to it.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: yellow
spec:
  containers:
  - name: lemon
    image: busybox
    command:
    - sleep
    - "1000"
  - name: gold
    image: redis
```

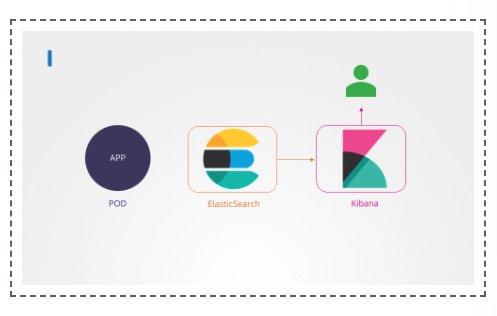
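Following the hint, `kubectl run yellow --image=busybox --dry-run=client -o yaml > yellow.yaml` produces a one-container skeleton that you then extend by hand. The sketch below skips the generation step and writes the finished manifest with a heredoc instead. The file name yellow.yaml is just a choice for this example.

```shell
#!/usr/bin/env bash
set -euo pipefail
# Starting point per the hint (needs kubectl):
#   kubectl run yellow --image=busybox --dry-run=client -o yaml > yellow.yaml
# Here the finished two-container manifest is written directly instead.
cat > yellow.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: yellow
spec:
  containers:
  - name: lemon
    image: busybox
    # busybox's default command (sh) exits at once; sleep keeps it running
    command:
    - sleep
    - "1000"
  - name: gold
    image: redis
EOF

# Quick sanity check: list the container entries that ended up in the file.
grep -E '^  - name:' yellow.yaml

# Then create the pod (needs a cluster):
#   kubectl apply -f yellow.yaml
```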

04. We have deployed an application logging stack in the elastic-stack namespace. Inspect it.

Before proceeding with the next set of questions, please wait for all the pods in the elastic-stack namespace to be ready. This can take a few minutes.

Ok
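Instead of refreshing `kubectl -n elastic-stack get pod` by hand, `kubectl -n elastic-stack wait --for=condition=Ready pod --all --timeout=300s` blocks until every pod in the namespace is ready.

If you would rather check a saved listing, below is a minimal sketch that succeeds only when every row's READY column reads n/n. `all_pods_ready` is a made-up helper name, and the sketch assumes the default column layout.

```shell
#!/usr/bin/env bash
# Succeed (exit 0) only if every pod row in `kubectl get pod` output on stdin
# has all of its containers ready, i.e. READY reads n/n.
all_pods_ready() {
  awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2]) bad = 1 } END { exit bad }'
}

# Rows copied from the elastic-stack listing in question 06.
ready='NAME             READY   STATUS    RESTARTS   AGE
app              1/1     Running   0          11m
elastic-search   1/1     Running   0          11m
kibana           1/1     Running   0          11m'

if printf '%s\n' "$ready" | all_pods_ready; then
  echo "all pods ready"
fi
```

On a live cluster: `kubectl -n elastic-stack get pod | all_pods_ready && echo ready`.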

05. Once the pod is in a ready state, inspect the Kibana UI using the link above your terminal.

There shouldn't be any logs for now.

We will configure a sidecar container for the application to send logs to Elastic Search.

NOTE: It can take a couple of minutes for the Kibana UI to be ready after the Kibana pod is ready.

You can inspect the Kibana logs by running:

```
kubectl -n elastic-stack logs kibana
```

Ok

06. Inspect the app pod and identify the number of containers in it.

It is deployed in the elastic-stack namespace.

Answer: 1

```
root@controlplane:~# kubectl get pod -n elastic-stack
NAME             READY   STATUS    RESTARTS   AGE
app              1/1     Running   0          11m
elastic-search   1/1     Running   0          11m
kibana           1/1     Running   0          11m
```

07. The application outputs logs to the file /log/app.log. View the logs and try to identify the user having issues with Login.

Inspect the log file inside the pod.

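```
kubectl -n elastic-stack exec -it app -- cat /log/app.log
```

Or open a shell inside the app container and look around (note the namespace; without -n you would land in the app pod of the default namespace):

```
kubectl -n elastic-stack exec -it app -- sh
```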
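In a long log, filtering is faster than reading. Below is a minimal sketch that prints each user appearing on a "failed to login" line. It makes two assumptions: that failures are logged with those words, and that user names look like USER<something>. `failed_login_users` is a made-up helper, and the sample lines are invented, not the real contents of /log/app.log.

```shell
#!/usr/bin/env bash
# Print, once each, the USER tokens found on "failed to login" lines of a log on stdin.
failed_login_users() {
  grep -i 'failed to login' | grep -oE 'USER[A-Za-z0-9_]+' | sort -u
}

# Invented lines in an assumed format (not the actual lab log).
sample_log='2022-01-24 08:50:01 INFO USER_A logged in
2022-01-24 08:50:02 WARNING USER_B Failed to Login as the account is locked
2022-01-24 08:50:03 INFO USER_A logged out'

printf '%s\n' "$sample_log" | failed_login_users
```

Against the real pod, drop -it when piping, since no terminal is needed: `kubectl -n elastic-stack exec app -- cat /log/app.log | failed_login_users`. Because the log volume is the hostPath /var/log/webapp, the same app.log should also be readable directly on the node that runs the pod.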

08. Edit the pod to add a sidecar container to send logs to Elastic Search.

Mount the log volume to the sidecar container.

Only add a new container. Do not modify anything else. Use the spec provided below.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: app
  namespace: elastic-stack
  labels:
    name: app
spec:
  containers:
  - name: app
    image: kodekloud/event-simulator
    volumeMounts:
    - mountPath: /log
      name: log-volume
  - name: sidecar
    image: kodekloud/filebeat-configured
    volumeMounts:
    - mountPath: /var/log/event-simulator/
      name: log-volume
  volumes:
  - name: log-volume
    hostPath:
      # directory location on host
      path: /var/log/webapp
      # this field is optional
      type: DirectoryOrCreate
```
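The sidecar works because both containers mount the same log-volume. The app writes /log/app.log, and the sidecar sees that very file as /var/log/event-simulator/app.log. The sketch below is only a local analogy: one temp directory stands in for the hostPath volume and two symlinks stand in for the two mountPaths. In a real pod the container runtime sets up mounts, not symlinks.

```shell
#!/usr/bin/env bash
set -eu
# Local analogy only: a temp directory plays the hostPath volume
# (/var/log/webapp on the node); two symlinks play the containers' mountPaths.
root=$(mktemp -d)
trap 'rm -rf "$root"' EXIT

mkdir -p "$root/var/log/webapp"                   # the log-volume itself
ln -s "$root/var/log/webapp" "$root/app-log"      # app container: /log
ln -s "$root/var/log/webapp" "$root/sidecar-log"  # sidecar: /var/log/event-simulator/

# The app appends to app.log under its own mount path...
echo "sample log line" >> "$root/app-log/app.log"

# ...and the sidecar reads the same file under its mount path.
cat "$root/sidecar-log/app.log"
```

In the lab itself, `kubectl edit pod app -n elastic-stack` refuses to save this change, because the container list of an existing pod cannot be modified. kubectl then stores your edited copy under /tmp, and `kubectl replace --force -f <that file>` deletes the pod and recreates it with the sidecar.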

09. Inspect the Kibana UI. You should now see logs appearing in the Discover section.

You might have to wait a couple of minutes for the logs to populate, and you might have to create an index pattern to list them. If you are not sure how, check this video: https://bit.ly/2EXYdHf

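Before creating the index pattern, you can confirm that logs are reaching Elasticsearch by listing its indices through NodePort 30200 (defined in elasticsearch-service.yaml), e.g. `curl "http://<node-ip>:30200/_cat/indices?v"`.

Below is a sketch that filters that output. It assumes the kodekloud/filebeat-configured image keeps Filebeat's default `filebeat-*` index naming, and `filebeat_indices` is a made-up helper. If such an index shows up, an index pattern of filebeat-* in Kibana should match it.

```shell
#!/usr/bin/env bash
# Print index names starting with "filebeat-" from `_cat/indices?v` output on stdin.
# In that output the first line is a header and the index name is column 3.
filebeat_indices() {
  awk 'NR > 1 && $3 ~ /^filebeat-/ { print $3 }'
}

# Made-up sample; real index names depend on the Filebeat version and the date.
sample_indices='health status index                     uuid pri rep docs.count docs.deleted store.size pri.store.size
green  open   .kibana                   aaaa 1   0   2          0            10kb       10kb
yellow open   filebeat-6.4.2-2022.01.24 bbbb 5   1   120        0            80kb       80kb'

printf '%s\n' "$sample_indices" | filebeat_indices
```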

```
total 48
drwxr-xr-x 3 root root 4096 Jan 24 08:40 .
drwx------ 1 root root 4096 Jan 24 08:40 ..
-rw-rw-rw- 1 root root  336 Jan 18 19:38 app.yaml
-rw-rw-rw- 1 root root  351 Jan 18 19:38 elasticsearch-pod.yaml
-rw-rw-rw- 1 root root  302 Jan 18 19:38 elasticsearch-service.yaml
drwxr-xr-x 2 root root 4096 Jan 24 08:40 fluent-example
-rw-rw-rw- 1 root root  271 Jan 18 19:38 fluent-ui-service.yaml
-rw-rw-rw- 1 root root  223 Jan 18 19:38 fluent-ui.yaml
-rw-rw-rw- 1 root root  668 Jan 18 19:38 fluentd-config.yaml
-rw-rw-rw- 1 root root  279 Jan 18 19:38 kibana-pod.yaml
-rw-rw-rw- 1 root root  198 Jan 18 19:38 kibana-service.yaml
-rw-rw-rw- 1 root root  338 Jan 18 19:38 webapp-fluent.yaml
```

```yaml
# app.yaml
apiVersion: v1
kind: Pod
metadata:
  name: app
  namespace: elastic-stack
  labels:
    name: app
spec:
  containers:
  - name: app
    image: kodekloud/event-simulator
    volumeMounts:
    - mountPath: /log
      name: log-volume
  volumes:
  - name: log-volume
    hostPath:
      path: /var/log/webapp
      type: DirectoryOrCreate
```

```yaml
# elasticsearch-service.yaml
kind: Service
apiVersion: v1
metadata:
  name: elasticsearch
  namespace: elastic-stack
spec:
  selector:
    name: elastic-search
  type: NodePort
  ports:
  - port: 9200
    targetPort: 9200
    nodePort: 30200
    name: port1
  - port: 9300
    targetPort: 9300
    nodePort: 30300
    name: port2
```

```yaml
# elasticsearch-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: elastic-search
  namespace: elastic-stack
  labels:
    name: elastic-search
spec:
  containers:
  - name: elastic-search
    image: docker.elastic.co/elasticsearch/elasticsearch:6.4.2
    ports:
    - containerPort: 9200
    - containerPort: 9300
    env:
    - name: discovery.type
      value: single-node
```

```yaml
# kibana-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: kibana
  namespace: elastic-stack
  labels:
    name: kibana
spec:
  containers:
  - name: kibana
    image: kibana:6.4.2
    ports:
    - containerPort: 5601
    env:
    - name: ELASTICSEARCH_URL
      value: http://elasticsearch:9200
```
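Kibana reaches Elasticsearch through ELASTICSEARCH_URL=http://elasticsearch:9200. The short name resolves because the kibana pod and the elasticsearch Service are both in the elastic-stack namespace, and a pod's DNS search list includes its own namespace's service domain. From another namespace you would need at least elasticsearch.elastic-stack.

Below is a tiny sketch of how the full Service DNS name is assembled. It assumes the common default cluster domain cluster.local (check your cluster's DNS configuration), and `service_fqdn` is a made-up helper.

```shell
#!/usr/bin/env bash
# Build <service>.<namespace>.svc.<cluster-domain>; cluster.local is only the usual default.
service_fqdn() {
  local svc="$1" ns="$2" domain="${3:-cluster.local}"
  printf '%s.%s.svc.%s\n' "$svc" "$ns" "$domain"
}

service_fqdn elasticsearch elastic-stack
```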

```yaml
# kibana-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: elastic-stack
spec:
  selector:
    name: kibana
  type: NodePort
  ports:
  - port: 5601
    targetPort: 5601
    nodePort: 30601
```

```yaml
# fluentd-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: fluentd-config
data:
  td-agent.conf: |
    <match td.*.*>
      @type tdlog
      apikey YOUR_API_KEY
      auto_create_table
      buffer_type file
      buffer_path /var/log/td-agent/buffer/td
      <secondary>
        @type file
        path /var/log/td-agent/failed_records
      </secondary>
    </match>
    <match debug.**>
      @type stdout
    </match>
    <match log.*.*>
      @type stdout
    </match>
    <source>
      @type forward
    </source>
    <source>
      @type http
      port 8888
    </source>
    <source>
      @type debug_agent
      bind 127.0.0.1
      port 24230
    </source>
```
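In Fluentd `<match>` patterns, `*` matches exactly one tag part, `**` matches zero or more parts, and the first matching block in the file wins.

Below is a rough sketch that translates the three patterns in this ConfigMap into extended regexes so you can see which block a tag would reach. It only handles `*` and a trailing `.**`, not alternation like `{a,b}` or a leading `**`. `fluentd_tag_matches` is a made-up helper, and the sample tags are hypothetical.

```shell
#!/usr/bin/env bash
# Return 0 if a Fluentd-style pattern matches a tag.
# Only covers `*` (one part) and a trailing `.**` (zero or more parts).
fluentd_tag_matches() {
  local pattern="$1" tag="$2" re
  # Escape literal dots, protect ".**", turn "*" into one part, then restore ".**".
  re=$(printf '%s' "$pattern" | sed -e 's/\./\\./g' -e 's/\\\.\*\*/@@/g' \
        -e 's/\*/[^.]+/g' -e 's/@@/(\\..+)?/g')
  printf '%s\n' "$tag" | grep -Eq "^${re}\$"
}

# Which <match> block would each hypothetical tag reach? (first match wins)
for tag in td.app.access debug.anything.here log.web.access other.tag; do
  matched="none"
  for pattern in 'td.*.*' 'debug.**' 'log.*.*'; do
    if fluentd_tag_matches "$pattern" "$tag"; then matched="$pattern"; break; fi
  done
  echo "$tag -> $matched"
done
```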

```yaml
# fluent-ui.yaml
apiVersion: v1
kind: Pod
metadata:
  name: fluent-ui
  labels:
    name: fluent-ui
spec:
  containers:
  - name: fluent-ui
    image: kodekloud/fluent-ui-running
    ports:
    - containerPort: 80
    - containerPort: 24224
```

```yaml
# fluent-ui-service.yaml
kind: Service
apiVersion: v1
metadata:
  name: fluent-ui-service
spec:
  selector:
    name: fluent-ui
  type: NodePort
  ports:
  - port: 80
    targetPort: 80
    nodePort: 30080
    name: ui
  - port: 24224
    targetPort: 24224
    nodePort: 30224
    name: receiver
```

```yaml
# webapp-fluent.yaml
apiVersion: v1
kind: Pod
metadata:
  name: app
  labels:
    name: app
spec:
  containers:
  - name: app
    image: kodekloud/event-simulator
    volumeMounts:
    - mountPath: /log
      name: log-volume
  # Volume to store the logs
  volumes:
  - name: log-volume
    hostPath:
      path: /var/log/webapp
      type: DirectoryOrCreate
```

```yaml
# fluent-example/td-agent-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: td-agent-config
data:
  td-agent.conf: |
    <match td.*.*>
      @type tdlog
      apikey YOUR_API_KEY
      auto_create_table
      buffer_type file
      buffer_path /var/log/td-agent/buffer/td
      <secondary>
        @type file
        path /var/log/td-agent/failed_records
      </secondary>
    </match>
    <match debug.**>
      @type stdout
    </match>
    <source>
      @type forward
    </source>
    <source>
      @type http
      port 8888
    </source>
    <source>
      @type debug_agent
      bind 127.0.0.1
      port 24230
    </source>
    <source>
```

Reference: Communicate Between Containers in the Same Pod Using a Shared Volume (kubernetes.io)
